import threading
import time

import cv2
import requests
from ultralytics import YOLO

# Constants
VIDEO_FRAMES_TO_SKIP = 10  # process one frame out of every 10 read; the other 9 are skipped
VIRTUAL_SENSOR_IP_ADDRESS = "192.168.0.135"
VIDEO_URL_USERNAME = "admin"
VIDEO_URL_PASSWORD = "admin"
VIDEO_STREAM_URL = f"http://{VIDEO_URL_USERNAME}:{VIDEO_URL_PASSWORD}@192.168.0.229:8081/video"

# Global variables for communication between threads

lock = threading.Lock()

obj_location = None

# Function to send an HTTP request with the location of the detected object

def send_request():

global obj_location

while True:

with lock:

x_percent = 0

y_percent = 0

w_percent = 0

h_percent = 0

if obj_location is not None:

x_percent, y_percent, w_percent, h_percent = obj_location

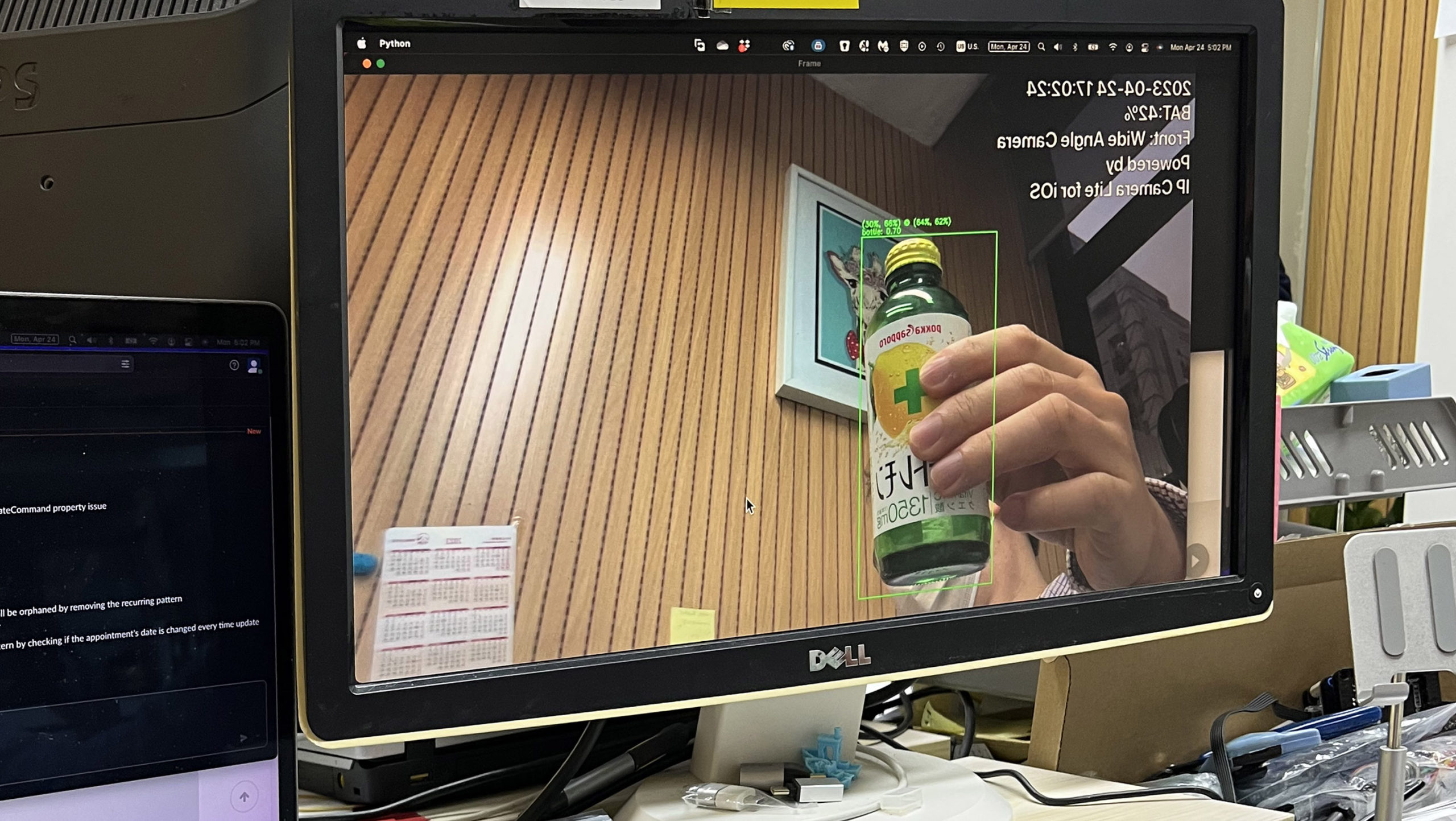

url = f"http://{VIRTUAL_SENSOR_IP_ADDRESS}/set?t=c&r={round(x_percent * 1024 / 100)}&g={round(w_percent * 1024 / 100)}&b={round(h_percent * 1024 / 100)}"

#print(f"Sending request to {url}")

requests.get(url)

obj_location = None

time.sleep(0.5)

# Load the pre-trained YOLOv8 model

model = YOLO("yolov8n.pt")

# Open a video capture object

cap = cv2.VideoCapture(0)

#cap = cv2.VideoCapture(VIDEO_STREAM_URL)

# Start the secondary thread to send HTTP requests

thread = threading.Thread(target=send_request)

thread.daemon = True

thread.start()

frameCount = 0

while True:
    # Read a frame from the video capture
    ret, frame = cap.read()
    if not ret:
        break

    # Only run detection on every VIDEO_FRAMES_TO_SKIP-th frame
    frameCount = frameCount + 1
    if (frameCount % VIDEO_FRAMES_TO_SKIP) != 0:
        #print( f"{frameCount} Skip" )
        continue

    (h, w) = frame.shape[:2]
    frameCount = 0

    # Flip the image so that it will display as a mirror image
    flipped_frame = cv2.flip(frame, 1)

    # Perform object detection
    results = model.predict(flipped_frame)

    # Iterate over the detected objects and annotate each confident detection
    for box in results[0].boxes:
        # Get the class label and confidence score
        classification_index = int(box.cls[0])
        class_label = model.names[classification_index]
        confidence = float(box.conf[0])

        # Bounding box corners as (x1, y1, x2, y2) in pixels
        obj = box.xyxy[0]

        #if class_label=="bottle" and confidence > 0.5:
        if confidence > 0.5:
            # Draw the bounding box on the frame
            cv2.rectangle(flipped_frame, (int(obj[0]), int(obj[1])), (int(obj[2]), int(obj[3])), (0, 255, 0), 2)

            # Calculate the center and size of the box in pixels
            obj_center = (int((obj[0] + obj[2]) / 2), int((obj[1] + obj[3]) / 2))
            obj_size = (int(obj[2] - obj[0]), int(obj[3] - obj[1]))

            # Express the center and size as percentages of the frame
            x_loc_percent = int((obj_center[0] / w) * 100)
            y_loc_percent = int((obj_center[1] / h) * 100)
            w_percent = int((obj_size[0] / h) * 100)  # note that this is normalized as a % of the frame's height
            h_percent = int((obj_size[1] / h) * 100)
            #print(x_loc_percent, y_loc_percent, w_percent, h_percent)

            # Publish the location for the sender thread; the last confident
            # detection in the frame overwrites any earlier one
            with lock:
                obj_location = (x_loc_percent, y_loc_percent, w_percent, h_percent)

            # Draw the text on the bounding box
            text = f"{class_label}: {confidence:.2f}"
            cv2.putText(flipped_frame, text, (int(obj[0]), int(obj[1]) - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)
            cv2.putText(flipped_frame, f"({w_percent}%, {h_percent}%) @ ({x_loc_percent}%, {y_loc_percent}%)", (int(obj[0]), int(obj[1]) - 20), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)

    # Show the output frame
    cv2.imshow("Frame", flipped_frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the video capture object and close all windows

cap.release()

cv2.destroyAllWindows()
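
The bounding-box arithmetic and the request URL used in the script can be checked without a camera or sensor by pulling them into pure functions. The sketch below is one possible way to do that; `bbox_to_percent` and `build_sensor_url` are hypothetical helper names that do not appear in the script, and the formulas are copied from it, including the width being expressed as a percentage of the frame height.

```python
def bbox_to_percent(x1, y1, x2, y2, frame_w, frame_h):
    """Convert pixel box corners to (x %, y %, width %, height %).

    Hypothetical helper mirroring the script's arithmetic: the center is
    relative to the frame width and height, while both width and height
    are expressed as a percentage of the frame height.
    """
    center_x = int((x1 + x2) / 2)
    center_y = int((y1 + y2) / 2)
    box_w = int(x2 - x1)
    box_h = int(y2 - y1)
    return (
        int(center_x / frame_w * 100),
        int(center_y / frame_h * 100),
        int(box_w / frame_h * 100),
        int(box_h / frame_h * 100),
    )


def build_sensor_url(ip_address, x_percent, w_percent, h_percent):
    """Build the request URL, scaling each percentage to the 0-1024 range.

    Hypothetical helper; the query parameters (t, r, g, b) are the ones
    the script already sends, and y is omitted just as in the script.
    """
    def scale(percent):
        return round(percent * 1024 / 100)

    return (
        f"http://{ip_address}/set?t=c"
        f"&r={scale(x_percent)}&g={scale(w_percent)}&b={scale(h_percent)}"
    )


if __name__ == "__main__":
    # A 320x240 box centered in a 640x480 frame
    x, y, w, h = bbox_to_percent(160, 120, 480, 360, 640, 480)
    print(x, y, w, h)
    print(build_sensor_url("192.168.0.135", x, w, h))
```

Keeping these calculations free of OpenCV and `requests` makes it easy to confirm the scaling before pointing the script at real hardware.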
